Running LLMs locally

I've been playing around with Retrieval Augmented Generation (RAG), and just making sense of the landscape for running LLMs, let alone getting something set up and working, turned out to be quite a hassle.

Here are some quick bites of information to help you get started running an LLM locally.

- You can expect a well-performing local model to generate a sentence of text in about 4 seconds.

- This was on a decent desktop setup (Nvidia GTX 1070 GPU).

- It runs in the CLI, but there are tools that provide a ChatGPT-like UI in the browser.
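If you'd like to check numbers like these on your own hardware, Ollama (the tool covered below) reports timing stats alongside each response. Here's a minimal sketch, assuming a non-streaming `/api/generate` response (or the final chunk of a streamed one) that includes `eval_count` (tokens generated), `eval_duration` and `total_duration` (both in nanoseconds), as described in Ollama's API docs. The helper names are my own, and the sample numbers are made up for illustration, not measurements.

```python
NS_PER_SECOND = 1_000_000_000


def tokens_per_second(stats: dict) -> float:
    """Generation throughput from an Ollama /api/generate response dict.

    Assumes `eval_count` (number of generated tokens) and
    `eval_duration` (time spent generating them, in nanoseconds).
    """
    duration_ns = stats["eval_duration"]
    if duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    return stats["eval_count"] / (duration_ns / NS_PER_SECOND)


def total_seconds(stats: dict) -> float:
    """Total time Ollama reports for the request (`total_duration`, in ns)."""
    return stats["total_duration"] / NS_PER_SECOND


# Illustrative numbers only, not a real measurement.
example = {
    "eval_count": 500,
    "eval_duration": 25 * NS_PER_SECOND,
    "total_duration": 26_600_000_000,
}
print(f"{tokens_per_second(example):.1f} tokens/s")  # 20.0 tokens/s
print(f"{total_seconds(example):.1f} s total")  # 26.6 s total
```

Tokens per second is a fairer comparison between models than "seconds per sentence", since sentence length varies a lot.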

Here is an example of an essay the model generated (it took 26.6 seconds):

Prompt: Write an essay on the threat of supply chain attacks.

Title: The Looming Threat of Supply Chain Attacks in Cyberspace

Introduction

The modern world is heavily dependent on complex and interconnected supply chains to deliver goods and services efficiently. While the benefits of these systems are undeniable, they also present a significant vulnerability. One such threat is supply chain attacks, which exploit weak points within the network to compromise critical infrastructure and sensitive data. This essay will discuss the nature of supply chain attacks, their increasing prevalence in cyberspace, and potential mitigation strategies for organizations to safeguard against these malicious intrusions.

I. Understanding Supply Chain Attacks

A supply chain attack is a cyber-attack that targets the vulnerabilities within a system's supply chain. By exploiting weak links in the network, such as software developers, hardware manufacturers, or third-party vendors, adversaries can gain unauthorized access to an organization's sensitive data and critical infrastructure. These attacks are often carried out by inserting malicious components into the supply chain or manipulating existing ones, thereby compromising the security of the entire system.

II. The Prevalence of Supply Chain Attacks in Cyberspace

As globalization continues to increase interdependencies among nations and organizations, the risk of supply chain attacks has become an increasingly pressing concern. High-profile cases such as Stuxnet, which targeted Iran's nuclear program by exploiting a vulnerability in the Windows operating system, or the SolarWinds attack, which compromised software updates for hundreds of organizations worldwide, demonstrate the devastating potential of these threats. The growing prevalence of supply chain attacks highlights the need for proactive measures to mitigate their impact and protect critical infrastructure.

III. Consequences of Supply Chain Attacks

The effects of supply chain attacks can be far-reaching and damaging, with both short-term and long-term consequences. Organizations may face significant financial losses due to theft, data breaches, or system downtime. Additionally, the loss of public trust in an organization's ability to protect sensitive information can lead to reputational damage and a potential decline in business. Furthermore, these attacks can have severe geopolitical implications, as seen in the case of SolarWinds, where nation-states targeted various sectors, including defense, energy, finance, and technology.

IV. Mitigation Strategies for Supply Chain Attacks

To combat supply chain attacks, organizations must adopt a comprehensive approach that includes the following strategies:

1. Risk Assessment: Conduct regular risk assessments of third-party vendors and suppliers to identify potential vulnerabilities in the supply chain.

2. Due Diligence: Perform thorough due diligence on prospective partners, ensuring they adhere to strict security standards and have a strong track record in cybersecurity.

3. End-to-End Encryption: Implement end-to-end encryption for data transmission and storage, minimizing the risk of unauthorized access.

4. Secure Software Development Life Cycle (SDLC): Incorporate security measures throughout the software development life cycle, from design to implementation and maintenance.

Getting things set up

There are quite a few ways to run a model, ranging from simply installing a tool to writing and running your own code.

The tool I'm using is Ollama, which has a very simple interface and runs natively on Windows, Linux and Mac (so no wrestling with weird OS-specific issues).

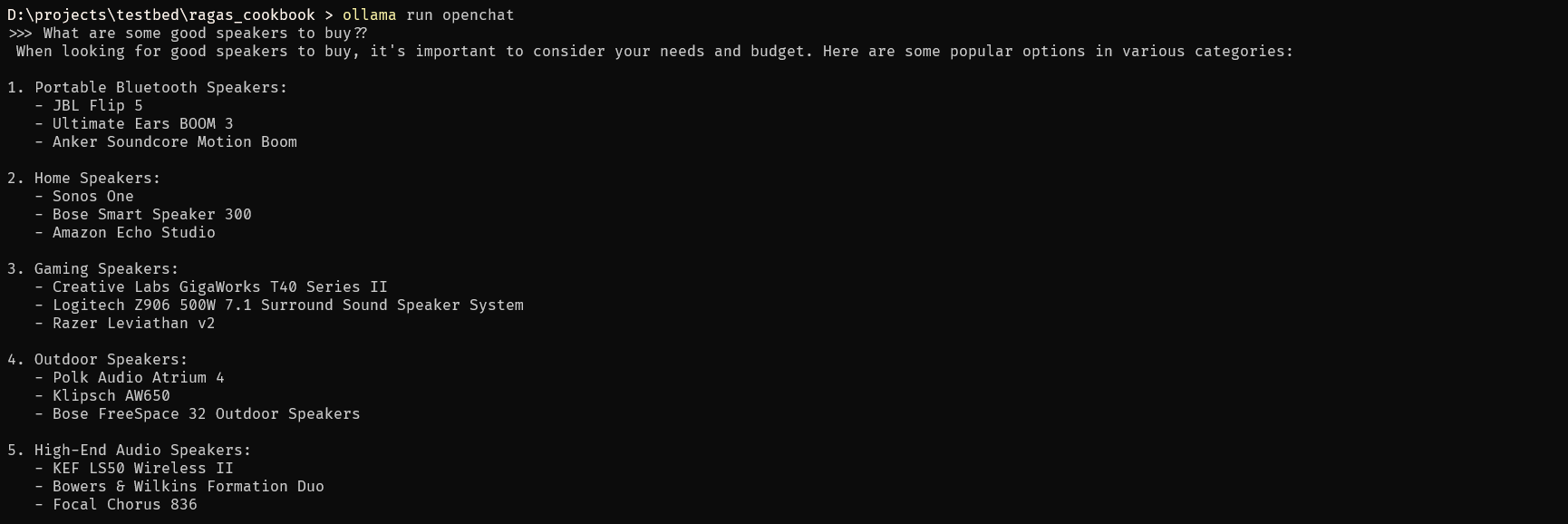

Once it's installed, you can run `ollama` in your terminal. The CLI is pleasantly minimal; only two commands are needed:

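```shell
# Download a model from the Ollama library
ollama pull <model_name>

# Start an interactive chat session with it
ollama run <model_name>
```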

Trying out a new model is as simple as running these two commands in sequence.
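The same two commands are easy to drive from a script. Here's a minimal sketch using Python's `subprocess`, assuming `ollama` is on your `PATH`. It relies on `ollama run` accepting a one-shot prompt as an extra argument, in which case it prints the reply and exits instead of opening an interactive session. The helper names are hypothetical.

```python
import subprocess


def pull_command(model: str) -> list[str]:
    # Equivalent to typing: ollama pull <model_name>
    return ["ollama", "pull", model]


def run_command(model: str, prompt: str) -> list[str]:
    # Passing the prompt as an argument gives a one-shot answer
    # instead of an interactive chat session.
    return ["ollama", "run", model, prompt]


def ask(model: str, prompt: str) -> str:
    """Make sure the model is downloaded, then return its answer to one prompt."""
    # Download progress goes straight to the terminal (not captured).
    subprocess.run(pull_command(model), check=True)
    result = subprocess.run(
        run_command(model, prompt), capture_output=True, text=True, check=True
    )
    return result.stdout.strip()
```

For example, `ask("openchat", "Write one sentence about supply chain attacks.")` should return the model's reply as a string. `check=True` makes a failing command raise `subprocess.CalledProcessError` rather than silently returning empty output.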

Ollama also runs a local server at http://localhost:11434, which makes it easy to call from your own scripts and from other frameworks.
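Because the server speaks plain HTTP and JSON, a script needs nothing beyond the standard library. Here's a minimal sketch, assuming the `/api/generate` endpoint with `"stream": false` (so the reply arrives as a single JSON object whose `response` field holds the text) and that you've already pulled the model you name. The function names are my own.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    # stream=False asks for one JSON object instead of a stream of chunks
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send one prompt to the local Ollama server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # The generated text is in the "response" field
    return body["response"]
```

With Ollama running, `generate("openchat", "Why is the sky blue?")` should return the answer as a plain string. The generous timeout is there because the first request may also have to load the model into memory.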

Docs and the API spec are available here.
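By default, the `/api/generate` endpoint streams its answer as newline-delimited JSON: one object per line, each carrying a fragment of text in `response`, with `done` set to true on the last one. Here's a minimal sketch of consuming that stream with the standard library so text can be shown as it arrives; the helper names are hypothetical, and skipping blank lines is just a defensive assumption.

```python
import json
import urllib.request
from typing import Iterable, Iterator

OLLAMA_URL = "http://localhost:11434"


def iter_fragments(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from Ollama's newline-delimited JSON stream."""
    for line in lines:
        if not line.strip():
            continue  # skip blank lines defensively
        chunk = json.loads(line)
        yield chunk.get("response", "")
        if chunk.get("done"):
            break  # the final object marks the end of the answer


def stream_generate(model: str, prompt: str) -> Iterator[str]:
    """Stream a completion from the local server, fragment by fragment."""
    payload = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    request = urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        # The HTTP response iterates line by line: one JSON object per line
        yield from iter_fragments(response)
```

Usage: `for piece in stream_generate("openchat", "Tell me a joke"): print(piece, end="", flush=True)`. Splitting the line parsing into `iter_fragments` keeps it testable without a running server.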

Model recommendation

I'm currently running the OpenChat model. It performs pretty well, which is especially important when running locally rather than on beefy GPUs/TPUs in the cloud.

Ollama has nice documentation for each of the models it makes available.

It also seems to benchmark well against other models. From its HuggingFace page:

Generic models:

- OpenChat: based on LLaMA-13B (2048 context length)

  - 🚀 105.7% of ChatGPT score on Vicuna GPT-4 evaluation

  - 🔥 80.9% Win-rate on AlpacaEval
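For multi-turn conversations with OpenChat from a script, Ollama also exposes a `/api/chat` endpoint that takes a list of messages. Here's a minimal sketch, assuming the model tag `openchat` from Ollama's library and a non-streaming request whose reply sits in `message.content`. The client resends the full history on every turn. The `Conversation` class is a hypothetical helper, not part of Ollama.

```python
import json
import urllib.request
from dataclasses import dataclass, field

CHAT_URL = "http://localhost:11434/api/chat"


@dataclass
class Conversation:
    model: str = "openchat"
    messages: list[dict] = field(default_factory=list)

    def payload(self, new_message: dict) -> dict:
        # Each request carries the whole history plus the new turn.
        return {
            "model": self.model,
            "messages": self.messages + [new_message],
            "stream": False,
        }

    def ask(self, text: str, timeout: float = 300.0) -> str:
        user_turn = {"role": "user", "content": text}
        request = urllib.request.Request(
            CHAT_URL,
            data=json.dumps(self.payload(user_turn)).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(request, timeout=timeout) as response:
            reply = json.load(response)["message"]
        # Record both turns only after a successful reply
        self.messages += [user_turn, {"role": "assistant", "content": reply["content"]}]
        return reply["content"]
```

Calling `chat = Conversation()` followed by `chat.ask("What is a supply chain attack?")` and then `chat.ask("Give me an example.")` lets the second question build on the first answer. Keep in mind the 2048-token context length quoted above: a long history will eventually need trimming.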

Running with a UI

I don't personally need a UI for what I'm doing, but there's what looks like a great web-based UI that works with Ollama. The project's main recommendation is to run it as a Docker container.

Hope this helps!